Artificial Intelligence and Machine Learning 101 for Professionals in Financial and Banking Services (Part 4 of 10)

Role of Data in Artificial Intelligence and Machine Learning (For Finance and Banking)

Introduction

In our previous piece, “How AI Automates Credit Underwriting and Decisioning Processes in Banks,” we delved into the transformative power of AI in automating the credit underwriting process.

Before that, in “Core Concepts of AI & ML for Professionals in Financial and Banking Services,” we explored the foundational principles of Artificial Intelligence and Machine Learning.

A common theme echoed throughout our series is the crucial role of data in AI and ML. This article dives deeper into the significance of data in AI applications for financial services. Let’s get started.

Data-Driven Decision Making

Traditionally, the finance and banking industries have relied on statistical tools and data visualization techniques to guide their decision-making processes. These methods have provided a solid foundation for understanding trends, patterns, and potential risks.

However, the advent of machine learning algorithms has significantly enhanced the capability to analyze and leverage data, offering deeper insights and more accurate predictions.

Let’s explore how banks and financial institutions use data in decision-making and where machine learning comes into the picture.

Examples of Data-Driven Decision Making from the Banking and Finance Domain

- Credit Scoring and Risk Management: Banks use data from credit reports, transaction histories, and other financial behaviors to assess the creditworthiness of individuals or businesses applying for loans. This approach minimizes the risk of bad debt for the bank while ensuring loans are offered to customers who are most likely to repay them. Machine learning algorithms in risk management use data from credit reports, transactions, financial behavior, and applicant demographics to predict the likelihood of default, enabling banks to make better lending decisions. A further advantage of machine learning algorithms is that they can analyze alternative data, such as social media interactions, utility bill payments, and educational background, and relate it to the likelihood of default. This way, banks can also assess applicants with little or no credit history and serve a bigger market.

- Fraud Detection: Financial institutions analyze transaction data to identify patterns indicative of fraudulent activities. Machine learning algorithms can monitor banking transactions in real time and detect anomalies that deviate from a customer’s usual behavior, such as unusually large transfers or transactions in a foreign country. Immediate detection of fraud reduces financial losses for both the bank and its customers and enhances trust in the bank’s security measures. Machine learning models can also be retrained regularly on newly confirmed fraud cases, which helps them remain effective as fraud patterns evolve.

- Personalized Banking Services: Banks use customer data, including transaction history, account balances, and interaction data, to offer personalized financial products and advice. Machine learning models can use this data to identify customer needs and predict future financial behavior. This helps banks make offers and give advice tailored to each customer’s financial goals and needs. Because these models can be retrained on data about the latest banking products, banks can start recommending new products soon after they launch.

- Algorithmic Trading: Data-driven algorithms analyze market data to make fast trading decisions. These algorithms can detect trends and patterns in market prices, volumes, and historical data to execute trades at optimal times. This enhances the ability to profit from market movements and reduces the impact of human emotion on trading decisions, leading to potentially higher returns.

- Portfolio Management: Wealth managers and investment firms use data on market trends, economic indicators, and individual asset performance to manage investment portfolios. Data-driven models can suggest asset allocations that seek the best expected return for a given level of risk. They can generate tailored investment strategies that align with each client’s risk tolerance and financial goals, improving investment outcomes.

- Regulatory Compliance and Reporting: Financial institutions leverage data analysis to ensure compliance with regulatory requirements. This includes monitoring transactions for signs of money laundering or insider trading and ensuring accurate financial reporting. This reduces the risk of regulatory fines and sanctions, while also protecting the integrity of the financial system.

- Customer Segmentation for Marketing: Banks analyze customer data to segment their customer base into distinct groups based on behavior, preferences, and demographic factors. This segmentation is used for targeted marketing campaigns and product development. This increases the effectiveness of marketing efforts, leading to higher conversion rates and more efficient use of marketing resources.
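The fraud-detection idea of flagging transactions that deviate from a customer’s usual behavior can be illustrated with a deliberately simple statistical baseline. The sketch below is hypothetical, not a production fraud model: it measures how many standard deviations a new transaction amount lies from the customer’s past average (a z-score) and flags it above an assumed cutoff of 3. Machine learning systems go further by learning from many signals at once (merchant, location, time of day) and from confirmed fraud cases.

```python
from statistics import mean, stdev


def amount_zscore(history: list[float], amount: float) -> float:
    """Standard deviations between `amount` and the customer's past average.

    `history` must contain at least two past transaction amounts.
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # All past amounts were identical: treat any different amount as extreme.
        return 0.0 if amount == mu else float("inf")
    return (amount - mu) / sigma


def is_suspicious(history: list[float], amount: float, cutoff: float = 3.0) -> bool:
    """Flag amounts unusually far above or below normal (cutoff is an assumption)."""
    return abs(amount_zscore(history, amount)) > cutoff


past_amounts = [42.0, 55.5, 38.2, 61.0, 47.3]  # hypothetical card history
print(is_suspicious(past_amounts, 2500.0))  # -> True (far above normal spending)
print(is_suspicious(past_amounts, 50.0))    # -> False (a typical purchase)
```

A fixed cutoff like this is exactly the kind of hand-set rule that a learned model replaces with patterns estimated from labeled fraud data.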

In traditional systems, data is fed into different analytical formulae to derive complex statistical measures. In these systems, data patterns are derived analytically and programmed into algorithms.

However, machine learning algorithms use data differently: they learn the patterns directly from the data. Let’s explore how this happens.

How do Machine Learning Algorithms use data?

Machine learning algorithms are different because they learn from data to identify patterns, make decisions, and predict outcomes, without being explicitly programmed for each task. This contrasts with traditional statistical or analytical methods, in which the data patterns are worked out in advance and explicitly programmed into the system. In essence, machine learning algorithms derive the rules from the data, so those rules do not have to be hand-coded.
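To make the contrast concrete, here is a minimal sketch under simplifying assumptions. The traditional approach hard-codes a credit-score cutoff chosen by an analyst (650 is an arbitrary illustrative value). The learned approach tries each observed score as a cutoff and keeps the one that best separates repaid from defaulted loans in labeled historical data. A single learned threshold (a one-feature decision stump) is far simpler than the models used in practice, and the function names and data are hypothetical, but it shows where the rule comes from in each case.

```python
def rule_based_approve(credit_score: int) -> bool:
    # Traditional approach: an analyst hand-codes the rule (650 is illustrative).
    return credit_score >= 650


def learn_cutoff(scores: list[int], repaid: list[int]) -> int:
    """Learn a credit-score cutoff from labeled history (a one-feature decision stump).

    repaid[i] is 1 if the i-th past loan was repaid and 0 if it defaulted.
    Each observed score is tried as a cutoff ("approve if score >= cutoff"),
    and the one that misclassifies the fewest past loans is kept.
    """
    best_cutoff, best_errors = scores[0], len(scores) + 1
    for cutoff in sorted(set(scores)):
        errors = sum((s >= cutoff) != bool(r) for s, r in zip(scores, repaid))
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical history: credit scores and whether each loan was repaid.
past_scores = [580, 600, 620, 690, 710, 750]
past_repaid = [0, 0, 0, 1, 1, 1]
print(learn_cutoff(past_scores, past_repaid))  # -> 690
```

If the historical data changes, rerunning `learn_cutoff` produces a new rule without anyone editing the code, which is the essential difference described above.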

ML algorithms use data for two main purposes:

1. To Train a Model. Training uses a carefully curated historical dataset. For example, a collection of approved and rejected loan applications, along with applicant profiles, can be used to train a model to predict whether a new loan application should be approved.

2. To Analyze. Once trained, the model is fed new, real-time data and uses it to make a decision. An example is a new loan application that needs to be analyzed for approval.

Data for Training

When historical data is used to train a model, it is divided into three datasets. First, let’s look at what these datasets are; then we will look at an example.

- Training Data: This is the dataset used to train the ML model. It includes both the input features (data points or variables) and the target outcomes (the result we want the model to predict). The training process involves adjusting the model’s parameters to minimize the difference between its predictions and the actual outcomes in the training data.

- Validation Data: This subset of the data is used to tune the model’s hyperparameters (settings of the model that are fixed before the training process begins) and to detect and guard against overfitting (where the model learns the training data too well, including its noise, and performs poorly on unseen data).

- Testing Data: After training and validation, the model is tested on a separate dataset not seen by the model during its training. This step assesses how well the model can generalize its learning to new, unseen data.
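A minimal sketch of this three-way split, assuming the records fit in memory and using an illustrative 70/15/15 ratio (a common starting point, not a rule). The function name and parameters are hypothetical; libraries such as scikit-learn offer `train_test_split` for the same purpose.

```python
import random


def train_val_test_split(records, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle the records once, then carve out test, validation, and training sets."""
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]  # everything left over is used for training
    return train, val, test


loan_ids = list(range(1, 1001))  # stand-ins for 1,000 historical loan applications
train, val, test = train_val_test_split(loan_ids)
print(len(train), len(val), len(test))  # -> 700 150 150
```

For time-ordered financial data such as loan vintages, splitting chronologically (train on older loans, test on newer ones) is often a more realistic check of real-world performance than a random shuffle.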

What does ML do with data during the training phase?

Feature Extraction: Before ML algorithms can process raw data, features must be extracted from it. Features are individual measurable properties or characteristics of the data. These features form the input that the algorithms use to make decisions or predictions.

Here are examples of how feature extraction works for an ML algorithm that approves loan applications:

- Credit Score Extraction: Extracting the applicant’s credit score from their credit report, which is a numeric representation of the applicant’s creditworthiness.

- Income Verification: Transforming textual or categorical representations of income into a numeric form, for example by extracting and converting reported annual salary into a monthly or weekly figure for consistency.

- Employment History Length: Calculating the length of time the applicant has been with their current employer from the date of hire to the present, converting this into a numeric feature such as the total number of months or years.

- Debt-to-Income Ratio (DTI): Computing the DTI by dividing total monthly debt payments by monthly income, which is a crucial feature indicating the applicant’s ability to manage monthly payments and repay debts.

- Loan Amount Requested: Normalizing the amount of money the applicant is requesting to borrow. The value can be scaled down to match the range of the other numeric features in the dataset.

- Age of Credit History: Extracting the age of the applicant’s oldest credit line from the credit report to represent the applicant’s experience with credit.

- Number of Open Credit Lines: Counting the number of currently open credit lines from credit reports, which may include credit cards, loans, and other credit facilities.

- Previous Defaults: Identifying any past defaults or delinquencies reported in the credit report and encoding them as a binary feature (e.g., 1 for defaults present, 0 for no defaults).

- Home Ownership Status: Converting the homeownership status (e.g., rent, own, or mortgage) into numeric features using techniques like one-hot encoding, which creates one binary column per category.

- Educational Level: Encoding the highest level of education achieved (e.g., high school, bachelor’s, master’s) as a set of binary variables if this information is used in the decision-making process.

These extracted features would then be used by the machine learning algorithm to learn from historical loan application data, identify patterns, and predict whether a new loan application should be approved or not based on the likelihood of the loan being repaid.
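The feature-extraction steps above can be sketched in plain Python. Everything here is hypothetical: the raw field names (`annual_income`, `hire_date`, `credit_lines`, and so on), the category lists, and the loan-amount scaling bounds are assumptions for illustration, not a standard schema.

```python
from datetime import date

# Hypothetical category lists; a real pipeline would take these from the training data.
HOME_OWNERSHIP = ["rent", "own", "mortgage"]
EDUCATION = ["high school", "bachelor's", "master's"]


def months_between(start: date, end: date) -> int:
    """Whole months from start to end (a partial final month is not counted)."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return max(months, 0)


def one_hot(value: str, categories: list[str], prefix: str) -> dict[str, int]:
    """One binary (0/1) column per known category."""
    return {f"{prefix}_{c}": int(value == c) for c in categories}


def extract_features(app: dict, as_of: date,
                     loan_min: float = 1_000, loan_max: float = 100_000) -> dict:
    """Turn one raw application record into numeric features.

    All field names and the loan_min/loan_max scaling bounds are assumptions.
    """
    monthly_income = app["annual_income"] / 12
    scaled_loan = (app["loan_amount"] - loan_min) / (loan_max - loan_min)
    features = {
        "credit_score": app["credit_score"],
        "monthly_income": monthly_income,
        "employment_months": months_between(app["hire_date"], as_of),
        "dti": app["monthly_debt_payments"] / monthly_income,
        "loan_amount_scaled": min(max(scaled_loan, 0.0), 1.0),  # min-max scaled, clipped to [0, 1]
        "credit_history_years": months_between(app["oldest_credit_line"], as_of) / 12,
        "open_credit_lines": sum(1 for line in app["credit_lines"] if line["open"]),
        "previous_default": int(app["num_past_defaults"] > 0),
    }
    features.update(one_hot(app["home_ownership"], HOME_OWNERSHIP, "home"))
    features.update(one_hot(app["education"], EDUCATION, "edu"))
    return features


applicant = {  # hypothetical raw record
    "annual_income": 60_000, "credit_score": 680, "hire_date": date(2019, 3, 15),
    "monthly_debt_payments": 1_750, "loan_amount": 18_000,
    "oldest_credit_line": date(2012, 6, 1),
    "credit_lines": [{"open": True}, {"open": True}, {"open": False}],
    "num_past_defaults": 0, "home_ownership": "rent", "education": "bachelor's",
}
print(extract_features(applicant, as_of=date(2024, 3, 15)))
```

For this hypothetical applicant, monthly income works out to $5,000 and the debt-to-income ratio to 0.35 (35%).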

Labeling of Data: Labeling is the process of identifying and marking the data with the correct output. This step is crucial because it is from these labels that a supervised machine learning algorithm learns to make predictions. Here’s how labeling is generally done, taking the loan application approval process as an example:

- Define the Label: The first step is to define what the label represents. In loan application approval, the label typically represents whether a loan was paid back or defaulted. This is a binary label, often represented by a ‘1’ for loans that were paid back (positive class) and a ‘0’ for loans that defaulted (negative class).

- Collect Historical Data: Obtain a dataset of past loan applications that have been processed by the bank or financial institution. This dataset should include both the features of each application and the outcome (whether the loan was repaid or defaulted).

- Assign Labels to the Dataset: Go through the historical data and assign a label to each instance (loan application). For example, if a particular loan was repaid, it would be labeled with ‘1’, and if it defaulted, it would be labeled with ‘0’. This labeled historical data is what the training, validation, and test sets are later drawn from.

- Use Domain Experts: Sometimes, the labeling process requires domain expertise. For instance, the reason behind a loan default could be nuanced, and understanding these nuances may be necessary for accurate labeling. Credit officers or financial experts could be involved in this process to ensure labels accurately reflect the outcome.

- Automated Labeling: In cases where the outcome is unambiguous, labeling can be automated. For example, if the dataset includes a column for ‘loan status’ with entries such as ‘paid in full’ or ‘charged off’, a script can automatically assign ‘1’ or ‘0’ labels accordingly.

- Data Cleaning: During the labeling process, it’s also important to clean the data. This involves handling missing values, correcting errors, and ensuring consistency in labeling. Inconsistent or incorrect labels can significantly impair the machine learning model’s performance.

- Quality Assurance: After labeling, it’s vital to perform quality assurance checks on the labeled data. This can involve a subset of the data being reviewed by different team members to ensure the labels are correct.

- Prepare the Final Dataset: Once labeling is complete and quality assurance has been performed, the dataset is finalized. It includes features (inputs) and labels (desired outputs), ready for use in training a machine learning model.

In a real-world setting, especially when dealing with financial data, it’s crucial to ensure that the labeling is accurate, as mistakes in labels can lead to a model that makes incorrect and costly predictions. The integrity of the model’s output is only as good as the quality of the labels in the training data.
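The automated-labeling and quality-assurance steps can be sketched as follows, using the convention defined above (1 = repaid, 0 = defaulted). The status strings, function names, and sample size are hypothetical; anything that does not match an unambiguous status is routed to human review rather than guessed.

```python
import random

# Hypothetical status values; real loan systems use their own codes.
STATUS_TO_LABEL = {"paid in full": 1, "charged off": 0}  # 1 = repaid, 0 = defaulted


def auto_label(records):
    """Label unambiguous loans automatically; route everything else to review."""
    labeled, needs_review = [], []
    for rec in records:
        status = (rec.get("loan_status") or "").strip().lower()
        if status in STATUS_TO_LABEL:
            labeled.append({**rec, "label": STATUS_TO_LABEL[status]})
        else:
            needs_review.append(rec)  # e.g. restructured loans: ask credit officers
    return labeled, needs_review


def qa_sample(labeled, k=50, seed=0):
    """Draw a reproducible random sample of labeled records for a second reviewer."""
    return random.Random(seed).sample(labeled, min(k, len(labeled)))


loans = [
    {"id": 1, "loan_status": "Paid in Full"},
    {"id": 2, "loan_status": "charged off"},
    {"id": 3, "loan_status": "restructured"},
    {"id": 4},  # missing status
]
labeled, needs_review = auto_label(loans)
print([r["label"] for r in labeled], [r["id"] for r in needs_review])  # -> [1, 0] [3, 4]
```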

Model Building: The algorithm iteratively adjusts its parameters to minimize the difference between its predictions and the actual outcomes (in supervised learning) or to best capture the structure of the data (in unsupervised learning). This process uses optimization techniques to find the best parameters (or weights) for its predictions.

The training set is fed to the algorithm so it can learn the patterns associated with the outcomes. Hyperparameters are then tuned by training models with different combinations of hyperparameter values and comparing their performance on the validation set, so that the best-performing model can be selected. Once the model is trained and its hyperparameters are tuned, its final performance is evaluated on the unseen test set to gauge how it will perform in the real world.
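The model-building loop (fit on the training set, choose hyperparameters on the validation set, report once on the test set) can be sketched with a from-scratch logistic regression trained by gradient descent. This is an illustrative sketch, not a production implementation: the learning-rate and L2-penalty grid and the synthetic data are assumptions, and in practice a tested library such as scikit-learn’s `LogisticRegression` would normally be used instead.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logreg(X, y, lr=0.1, l2=0.0, epochs=500):
    """Batch gradient descent on log loss plus an (l2 / 2) * ||w||^2 penalty."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi  # gradient of log loss w.r.t. z
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * (gw / n + l2 * wj) for wj, gw in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w, b, X, y, threshold=0.5):
    hits = sum(int(predict_proba(w, b, xi) >= threshold) == yi for xi, yi in zip(X, y))
    return hits / len(y)


def tune_and_test(train, val, test, grid):
    """Fit one model per (lr, l2) setting, keep the best on validation data,
    then report that model's accuracy on the untouched test set."""
    best = None
    for lr, l2 in grid:
        w, b = train_logreg(*train, lr=lr, l2=l2)
        val_acc = accuracy(w, b, *val)
        if best is None or val_acc > best["val_acc"]:
            best = {"lr": lr, "l2": l2, "val_acc": val_acc, "w": w, "b": b}
    best["test_acc"] = accuracy(best["w"], best["b"], *test)
    return best


rng = random.Random(0)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(300)]
y = [int(x0 + x1 > 0) for x0, x1 in X]  # synthetic labels, for illustration only
splits = (X[:200], y[:200]), (X[200:250], y[200:250]), (X[250:], y[250:])
result = tune_and_test(*splits, grid=[(0.5, 0.0), (0.5, 0.01), (0.1, 0.0)])
print(result["lr"], result["l2"], result["val_acc"], result["test_acc"])
```

The test set is used exactly once, after the best setting has been chosen; selecting hyperparameters by test accuracy would make the final performance estimate optimistic.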

Data for Making Predictions & Decisions

The trained ML model is loaded into an environment where it can quickly access and process incoming data. The model applies the patterns it learned during training to the incoming features to make predictions or analyses. The predictions or analyses are then used to make decisions, such as approving a loan application or flagging a transaction as fraudulent.

Continuous Learning: If possible, the model’s predictions and the actual outcomes are fed back into the system to allow for continuous learning. In some systems, the model may be updated in real-time as new data arrives, though this is more complex and requires careful handling to avoid model drift.
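One conservative way to implement this feedback loop, sketched below with hypothetical names and thresholds, is to log each decision, attach the actual outcome once it is known, and trigger a controlled retraining when accuracy on recent cases falls noticeably below the accuracy measured at deployment, instead of changing the model on every new data point.

```python
from collections import deque


class OutcomeMonitor:
    """Log decisions with their real outcomes and flag when accuracy decays.

    Hypothetical helper: baseline_acc is the test-set accuracy measured before
    deployment; tolerance, window, and min_samples are illustrative choices.
    """

    def __init__(self, baseline_acc: float, tolerance: float = 0.05, window: int = 500):
        self.baseline_acc = baseline_acc
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # 1 if a prediction was right, else 0
        self.feedback = []  # (features, actual outcome) rows for the next retraining run

    def record(self, features: dict, predicted: int, actual: int) -> None:
        self.recent.append(int(predicted == actual))
        self.feedback.append((features, actual))

    def recent_accuracy(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else float("nan")

    def needs_retraining(self, min_samples: int = 100) -> bool:
        if len(self.recent) < min_samples:
            return False  # not enough resolved cases to judge yet
        return self.recent_accuracy() < self.baseline_acc - self.tolerance


monitor = OutcomeMonitor(baseline_acc=0.90, window=200)
for _ in range(150):
    monitor.record({}, predicted=1, actual=1)  # model was right
print(monitor.needs_retraining())  # -> False (recent accuracy 1.0)
for _ in range(150):
    monitor.record({}, predicted=1, actual=0)  # model was wrong
print(monitor.needs_retraining())  # -> True (last 200 cases: 50 right, 150 wrong)
```

In lending, the actual outcome (repaid or defaulted) may only be known months or years after the decision, so this loop is slow and retraining is typically periodic rather than real time.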

Simplified Example of How Data Is Used in Credit Scoring ML

Let’s consider a simplified example of how a machine learning model, such as logistic regression, can be used for credit scoring. Logistic regression is well-suited for binary classification tasks such as predicting whether an applicant will default (yes or no), which in turn informs whether a loan should be approved.

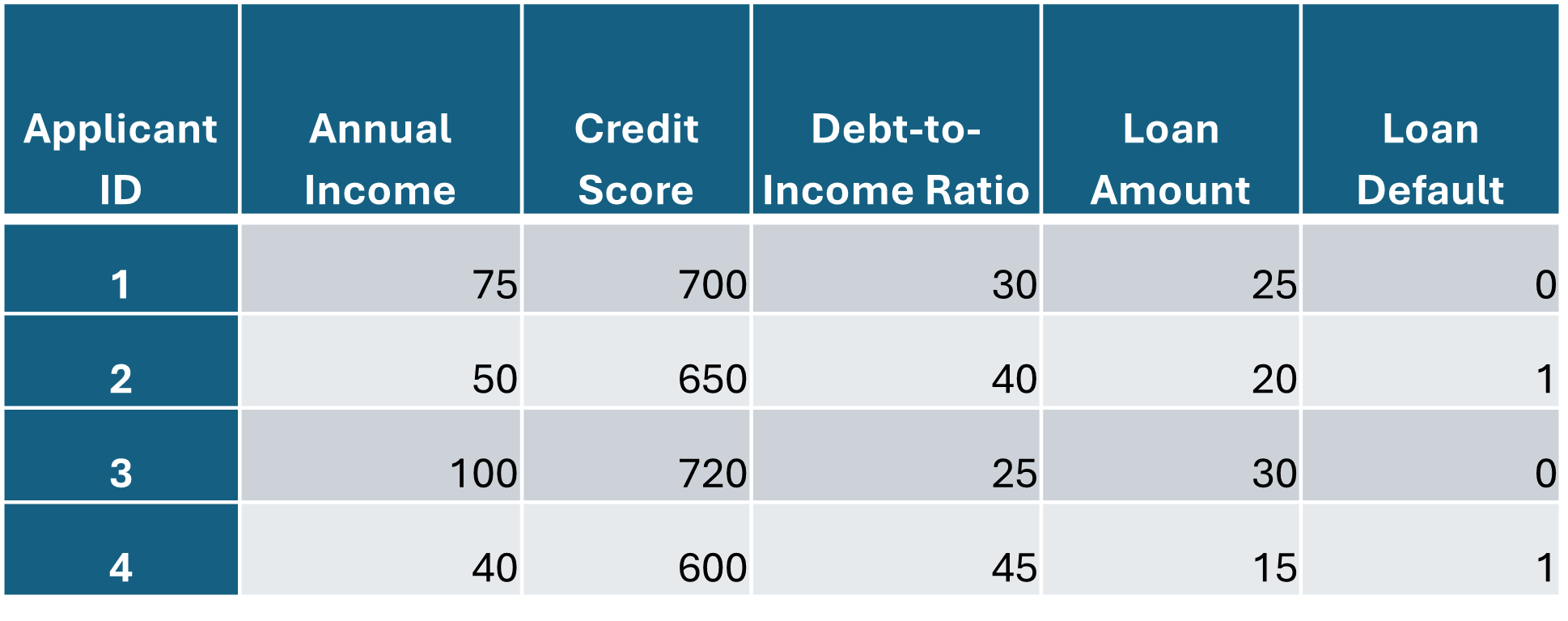

Sample Data

Suppose we have a dataset with information on past loan applicants, including their features (independent variables) and whether they defaulted on a loan (dependent variable). As we have seen above, the features might include:

- Annual Income (in thousands of dollars)

- Credit Score

- Debt-to-Income Ratio (percentage)

- Loan Amount Requested (in thousands of dollars)

The dependent variable is:

- Loan Default (1 for yes, 0 for no)

Here’s a small sample of the dataset:

Logistic Regression Model for Credit Scoring

The objective of the model is to predict whether a new loan applicant will default on a loan. The process is as follows:

Data Preparation: The data is cleaned and prepared. In this case, it’s already clean and ready for use.

Model Training: The dataset is first divided into training and testing data. The logistic regression model is then trained on the training data, learning the relationship between the features (Annual Income, Credit Score, Debt-to-Income Ratio, Loan Amount) and the target variable (Loan Default).

Model Use: After training, the model can predict the probability of default for new loan applicants based on their financial information.

For simplicity, let’s say our logistic regression model has been trained and we want to predict the likelihood of default for a new applicant with the following information:

- Annual Income: $60,000

- Credit Score: 680

- Debt-to-Income Ratio: 35%

- Loan Amount Requested: $18,000

The logistic regression model would use the learned weights (coefficients) for each feature to compute a probability that this applicant will default. If the probability is below a certain threshold (e.g., 0.5), the loan might be approved; otherwise, it might be denied.
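The computation described here is z = b0 + w1·x1 + w2·x2 + w3·x3 + w4·x4 followed by p = 1 / (1 + e^(-z)). The sketch below walks through it for the applicant above. The intercept and coefficients are made up purely for illustration; a trained model would supply its own, and real pipelines would usually scale the features first.

```python
import math

# Hypothetical intercept and coefficients, NOT taken from a real trained model.
# Units match the features above: income and loan amount in thousands of dollars,
# debt-to-income ratio in percent. Positive weights raise the default probability.
INTERCEPT = 2.0
WEIGHTS = {
    "annual_income_k": -0.02,  # higher income -> lower default risk
    "credit_score": -0.01,     # higher score -> lower default risk
    "dti_pct": 0.05,           # higher DTI -> higher default risk
    "loan_amount_k": 0.03,     # larger loan -> higher default risk
}


def default_probability(applicant: dict) -> float:
    """Logistic regression: p = 1 / (1 + exp(-z)), z = intercept + sum(w_i * x_i)."""
    z = INTERCEPT + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def decide(applicant: dict, threshold: float = 0.5) -> tuple[str, float]:
    """Approve when the predicted default probability is below the threshold."""
    p = default_probability(applicant)
    return ("approve" if p < threshold else "deny"), p


new_applicant = {"annual_income_k": 60, "credit_score": 680, "dti_pct": 35, "loan_amount_k": 18}
print(decide(new_applicant))
```

With these made-up weights, z = 2.0 - 1.2 - 6.8 + 1.75 + 0.54 = -3.71, so p is about 0.024. That is below the 0.5 threshold, so the sketch returns "approve".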

This example gives a high-level illustration of how machine learning, through a model like logistic regression, can leverage historical data to build a model that estimates how likely a new applicant is to default.

Different types of data in Machine Learning

Lastly, let’s look at different categories of data used in Machine Learning.

Data can be classified based on its nature and the type of information it represents. Understanding these classifications is crucial for selecting appropriate analysis techniques and machine learning models. The primary classifications of data include:

- Qualitative (Categorical) Data

Qualitative data represents categories or labels that describe attributes or qualities of data points. This type of data is divided into:

- Nominal Data: Data without any natural order or ranking. Examples include colors (red, blue, green), gender (male, female), types of cuisine (Italian, Mexican, Japanese), and, in banking, account types, branch locations, or loan approval status (approved, rejected).

- Ordinal Data: Data that maintains a natural order or ranking but does not quantify the difference between categories. Examples include satisfaction ratings (satisfied, neutral, dissatisfied), education level (high school, bachelor’s, master’s, doctorate), or economic status (low, middle, high income).

- Quantitative (Numerical) Data

Quantitative data represents quantities and can be measured on a numerical scale. It is divided into:

- Discrete Data: Data that can take on a countable number of values. Examples include the number of transactions, the number of open credit lines, or credit scores.

- Continuous Data: Data that can take on an infinite number of values within a given range. Examples include interest rates on loans, loan durations, loan amounts, stock prices, height, weight, or the amount of time spent on a task.

- Structured Data

Structured data is highly organized in a fixed format, such as rows and columns, which makes it easy to search and query with simple, straightforward operations. It typically resides in relational database management systems (RDBMS) and can include both qualitative and quantitative data types. Examples include spreadsheets, database tables, or CSV files.

- Unstructured Data

Unstructured data lacks a predefined data model or organization, making it more difficult to collect, process, and analyze. Examples include text documents, social media posts, videos, audio, and images.

Processing unstructured data often requires more complex methods, such as natural language processing (NLP) for text or convolutional neural networks (CNNs) for images.

- Semi-structured Data

Semi-structured data does not reside in relational databases but has some organizational properties that make it easier to analyze than unstructured data. It includes data formats like JSON and XML that contain tags or markers to separate semantic elements and enforce hierarchies of records and fields.
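To connect these categories to model inputs, the sketch below (hypothetical record and category names) parses a semi-structured JSON record with Python’s standard `json` module, flattens it into one structured row, and encodes each kind of data differently: counts stay discrete, amounts stay continuous, the ordinal satisfaction rating becomes an ordered integer code, and the nominal customer segment is one-hot encoded so the model does not infer a false ordering.

```python
import json

# Hypothetical semi-structured record (JSON), e.g. as a banking API might return it.
RAW = """{
  "customer": {"id": "C-1001", "segment": "retail"},
  "satisfaction": "neutral",
  "transactions": [{"amount": 120.50}, {"amount": 75.25}]
}"""

SEGMENTS = ["retail", "business", "private"]                   # nominal: no natural order
SATISFACTION_ORDER = ["dissatisfied", "neutral", "satisfied"]  # ordinal: ordered low to high


def to_structured_row(raw_json: str) -> dict:
    """Flatten one nested JSON record into a single row of model-ready columns."""
    rec = json.loads(raw_json)
    amounts = [t["amount"] for t in rec["transactions"]]
    row = {
        "customer_id": rec["customer"]["id"],  # identifier, kept for joins, not a feature
        "num_transactions": len(amounts),      # quantitative, discrete
        "total_amount": sum(amounts),          # quantitative, continuous
        # Ordinal: an integer code that preserves the order of the categories.
        "satisfaction_rank": SATISFACTION_ORDER.index(rec["satisfaction"]),
    }
    # Nominal: one-hot encoding, so the model does not assume retail < business < private.
    for seg in SEGMENTS:
        row[f"segment_{seg}"] = int(rec["customer"]["segment"] == seg)
    return row


print(to_structured_row(RAW))
```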

Classification of Datasets for Machine Learning

For the sake of completeness, we restate the three types of datasets that are important for creating a machine learning model:

Training Data: Used to train the machine learning model.

Validation Data: Used to fine-tune model hyperparameters and avoid overfitting.

Testing Data: Used to evaluate the final model’s performance, providing an unbiased assessment.

Conclusion

In this article, we showed how machine learning algorithms use historical data to build an ML model and then use that model to make predictions. The difference between ML algorithms and explicitly programmed algorithms is that ML algorithms learn the patterns present in the data to make predictions, whereas in an explicitly programmed system you have to code the logic or rules that capture those patterns.

With this understanding, you are now ready to look at the different machine learning algorithms typically used in the finance and banking industry.